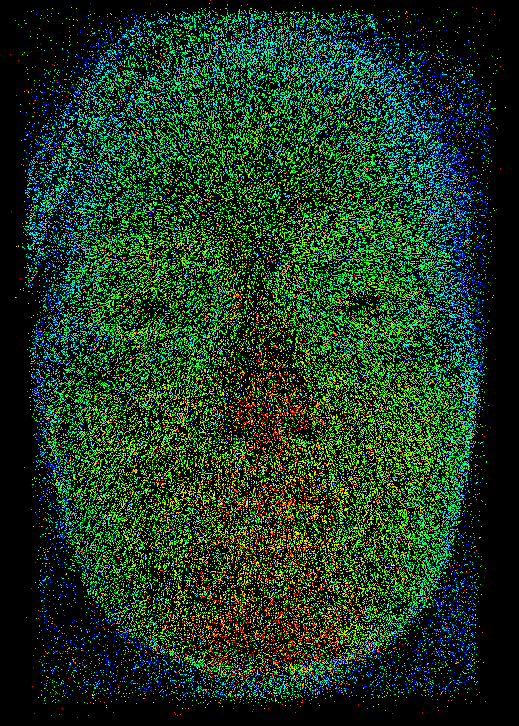

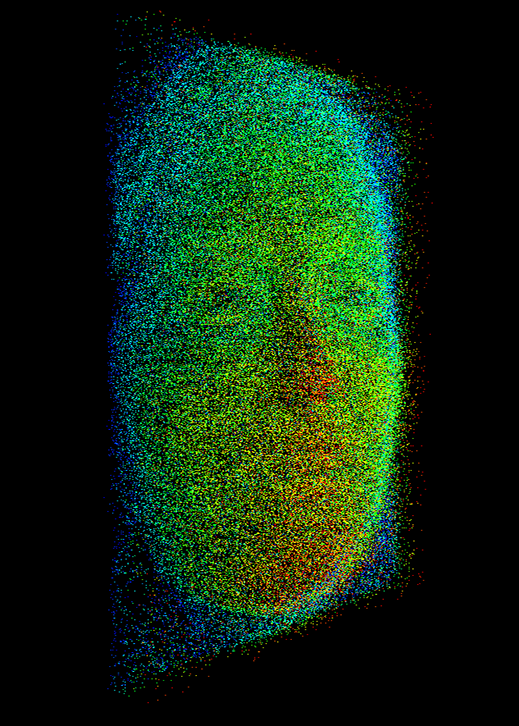

We applied our algorithms to two public datasets from Raytrix (Watch and Andrea). These datasets contain all the information needed to obtain the depth of a scene, including the raw images, the depth images and the camera calibration data. The following images (Watch dataset) show the raw images captured by the camera: the first is the "real" raw image and the second is the processed raw image.

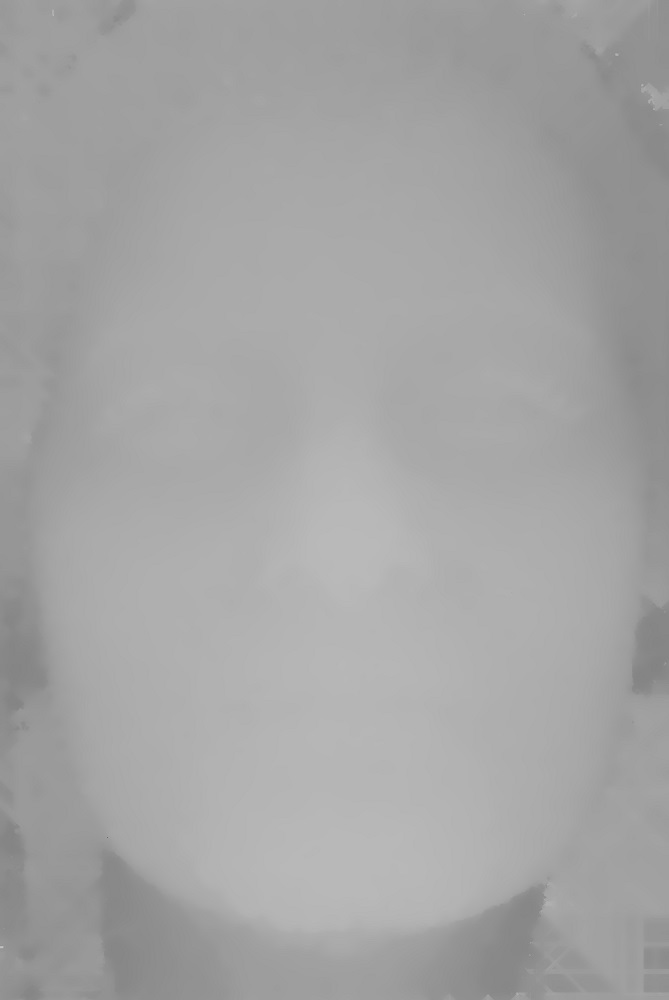

We also present the all-in-focus image, which is obtained after depth estimation. The depth map of a scene and the all-in-focus image, among other outputs, can be obtained with the software provided by Raytrix; the goal of our work is to obtain the same images without it.

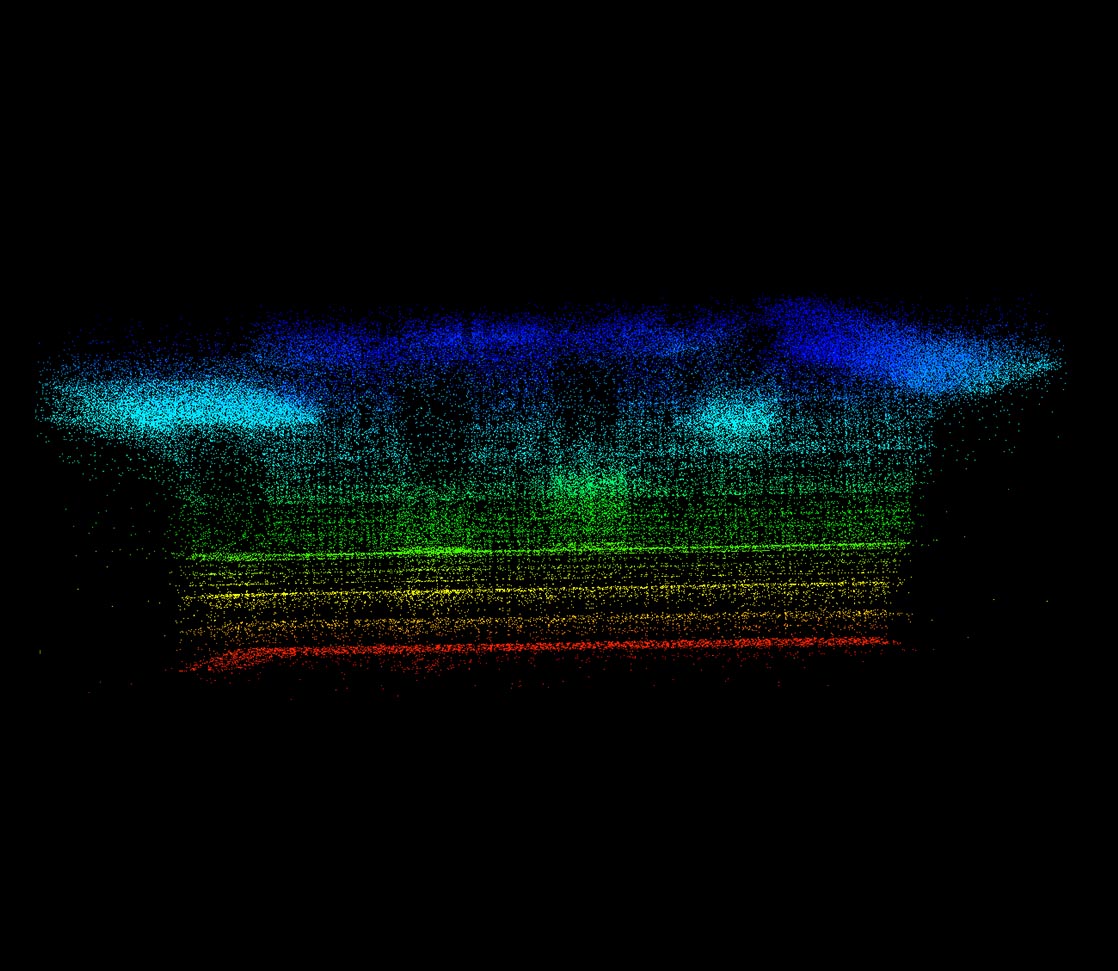

Our method is divided into several steps. To estimate the depth of a scene, we first need a set of points, which we obtain with the SIFT descriptor (almost 400,000 points). For each of these points we then search for correspondences, scoring candidate matches with a scaled sum of absolute differences (SAD) between two windows of pixels. The correspondences are then backtraced with the COMSAC method to find the depth of each point, which yields a sparse depth map. Finally, we expand the sparse depth map with a random growing method, which gives the final dense depth map.
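To make the matching step more concrete, the sketch below scores candidate matches with a window-based SAD. The "scaled" SAD is not defined here, so we assume it is the SAD divided by the number of pixels in the window (the mean absolute difference). The purely horizontal search in a second image, the window half-size, the disparity range and the acceptance threshold are all hypothetical choices for illustration, not the parameters of our implementation.

```python
def scaled_sad(img_a, img_b, centre_a, centre_b, half=2):
    """Mean absolute difference between two square windows.

    Assumption: "scaled" SAD = SAD / number of pixels in the window.
    img_a, img_b are 2-D lists of grey values; centres are (row, col).
    """
    ra, ca = centre_a
    rb, cb = centre_b
    total, count = 0.0, 0
    for dr in range(-half, half + 1):
        for dc in range(-half, half + 1):
            total += abs(img_a[ra + dr][ca + dc] - img_b[rb + dr][cb + dc])
            count += 1
    return total / count


def best_match(img_a, img_b, point, max_disp, half=2, threshold=10.0):
    """Search img_b along the same row for the window most similar to the
    one centred on `point` in img_a. Returns (disparity, score) or None.

    Hypothetical search: horizontal shifts of 0..max_disp pixels to the left.
    """
    row, col = point
    best = None
    for disp in range(max_disp + 1):
        col_b = col - disp
        if col_b - half < 0:  # window would leave the image
            break
        score = scaled_sad(img_a, img_b, point, (row, col_b), half)
        if best is None or score < best[1]:
            best = (disp, score)
    # Reject the best candidate if it is still too dissimilar.
    if best is not None and best[1] <= threshold:
        return best
    return None
```

For example, if the second image is the first one shifted three pixels to the left and the texture is distinctive, `best_match` returns a disparity of 3 with a score of 0.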

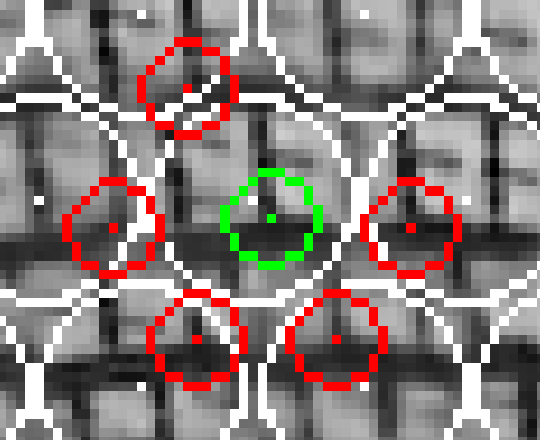

The SIFT descriptor produced good results, giving a good trade-off between the number of points and where they are located.

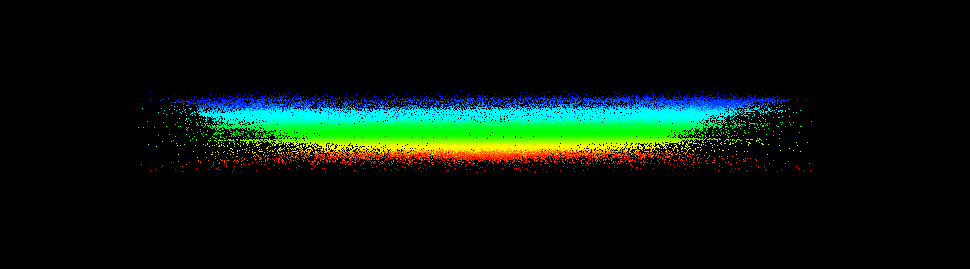

The scaled SAD also produced satisfactory results. The results were better in the most textured areas, where correspondences are easier to find, but less detailed areas also produced fine results. To be able to apply the COMSAC method, we only kept the points with two or more correspondences, which are shown in the following images.
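The filtering rule described above (keep a point only if it has two or more correspondences) can be sketched as follows. The layout of a match record as a `(point_id, view_id, disparity)` tuple is a hypothetical choice for illustration.

```python
from collections import defaultdict


def group_and_filter(matches, min_matches=2):
    """Group raw matches by point and keep points with >= min_matches.

    `matches` is a hypothetical list of (point_id, view_id, disparity)
    tuples, one per accepted correspondence.
    """
    grouped = defaultdict(list)
    for point_id, view_id, disparity in matches:
        grouped[point_id].append((view_id, disparity))
    # A consensus step needs at least two observations to compare.
    return {p: obs for p, obs in grouped.items() if len(obs) >= min_matches}
```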

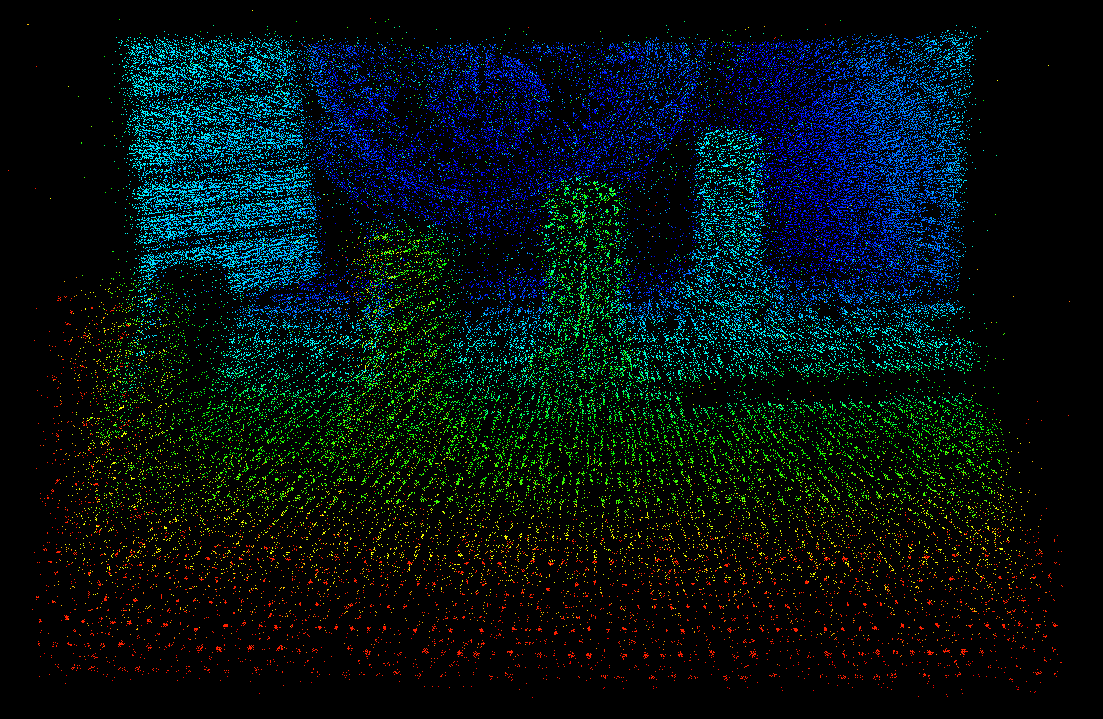

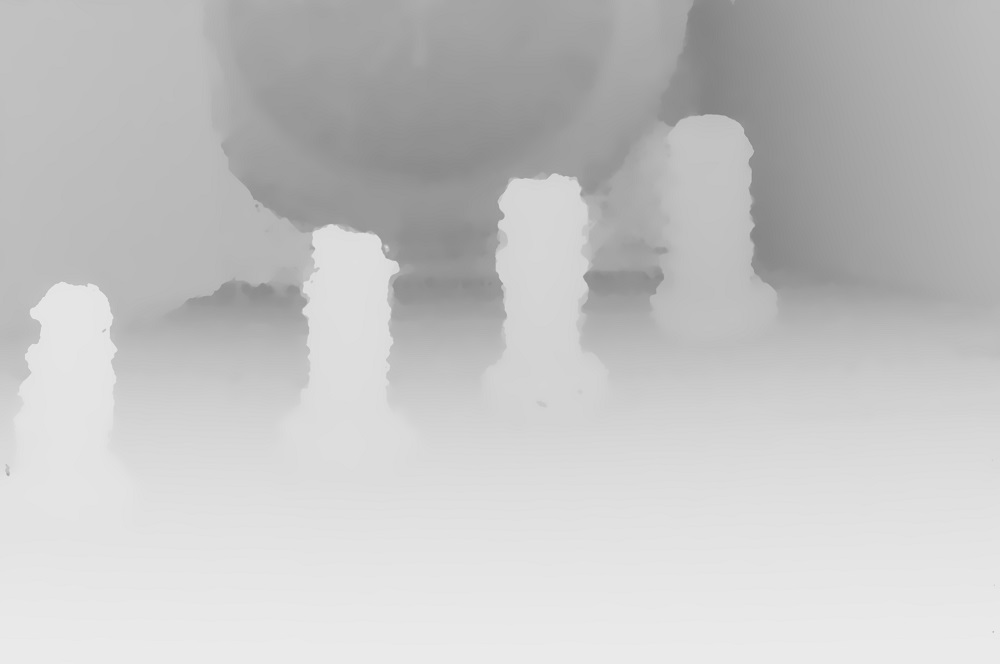

After finding the correspondences, we apply the COMSAC method to obtain robust results and estimate the depth. The results are good: some of the depth structure of the scene can already be clearly perceived. The difference between our sparse results and the corresponding points in the Raytrix depth image is 11%, which we consider a good result.
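The COMSAC method itself is not reproduced here. To illustrate the general idea of reaching a robust depth from several correspondences, the sketch below uses a plain consensus scheme in the spirit of RANSAC instead, which is a different technique: each correspondence is assumed to provide its own depth estimate, every estimate is tried as a hypothesis, and the inliers of the best-supported hypothesis are averaged. The per-correspondence estimates and the relative tolerance are assumptions.

```python
def consensus_depth(estimates, rel_tol=0.05):
    """Robust depth from several per-correspondence estimates.

    Stand-in for COMSAC (not the COMSAC algorithm itself): each estimate
    is tried as a hypothesis, inliers are estimates within rel_tol of it,
    and the inliers of the best-supported hypothesis are averaged.
    Returns (depth, inlier_count), or None if fewer than two estimates.
    """
    if len(estimates) < 2:
        return None
    best_inliers = []
    for hypothesis in estimates:
        inliers = [e for e in estimates
                   if abs(e - hypothesis) <= rel_tol * abs(hypothesis)]
        if len(inliers) > len(best_inliers):
            best_inliers = inliers
    return sum(best_inliers) / len(best_inliers), len(best_inliers)
```

Trying every estimate exhaustively instead of sampling at random is affordable here because each point has only a handful of correspondences; an outlier such as a single wrong match simply fails to gather support.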

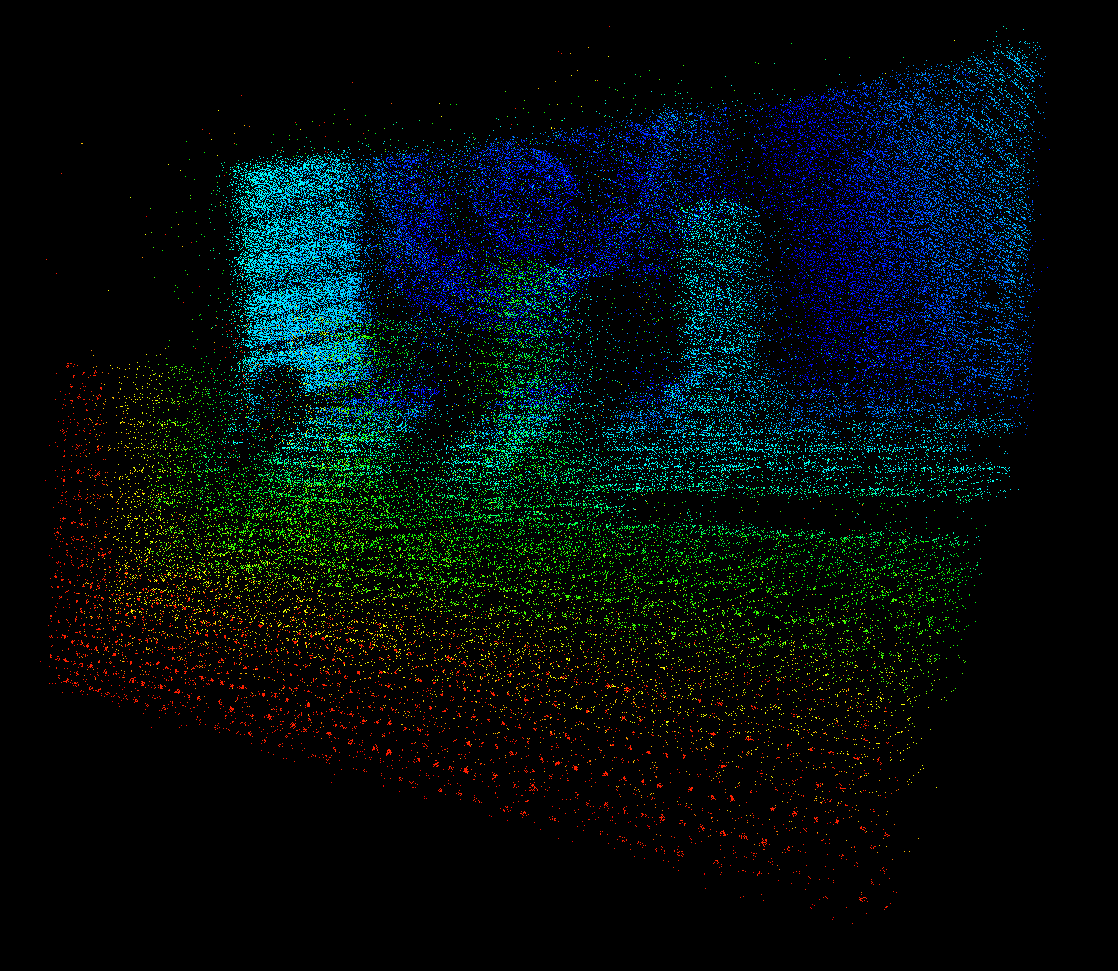

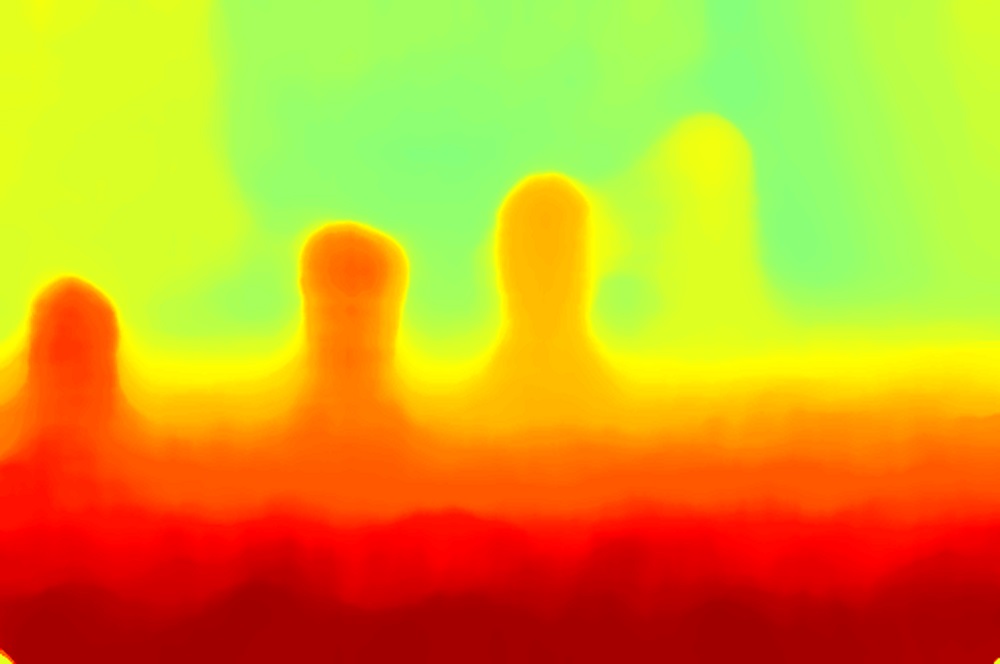

With a sparse depth map in hand, we make it dense by applying our random growing method. Despite its high computational cost, the method produced good results, similar to the reference ones.
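Our random growing method is not detailed here, so the following is only a hypothetical sketch of one way to grow a sparse depth map at random: unknown pixels bordering known ones are visited in random order, and each one receives the mean depth of its already-known 4-neighbours. Unknown pixels are stored as `None`; the `seed` parameter only makes the random order reproducible.

```python
import random


def random_grow(sparse, seed=0):
    """Densify a sparse depth map (2-D list, None = unknown) by random growth.

    Hypothetical scheme: pick a random unknown pixel on the boundary of the
    known region, fill it with the mean of its known 4-neighbours, repeat.
    """
    h, w = len(sparse), len(sparse[0])
    depth = [row[:] for row in sparse]  # copy rows; the input is untouched
    rng = random.Random(seed)

    def neighbours(r, c):
        for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
            rr, cc = r + dr, c + dc
            if 0 <= rr < h and 0 <= cc < w:
                yield rr, cc

    # Initial frontier: unknown pixels touching at least one known pixel.
    frontier = {(r, c) for r in range(h) for c in range(w)
                if depth[r][c] is None
                and any(depth[rr][cc] is not None for rr, cc in neighbours(r, c))}
    while frontier:
        r, c = rng.choice(sorted(frontier))  # sorted so the seed fixes the order
        known = [depth[rr][cc] for rr, cc in neighbours(r, c)
                 if depth[rr][cc] is not None]
        depth[r][c] = sum(known) / len(known)
        frontier.discard((r, c))
        # Newly reachable unknown pixels join the frontier.
        for rr, cc in neighbours(r, c):
            if depth[rr][cc] is None:
                frontier.add((rr, cc))
    return depth
```

Sorting the frontier on every step keeps the sketch short and deterministic, but it makes it slow on full-size images; a real implementation would keep the frontier in an indexable structure.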

A brief analysis of the results shows that, despite some differences in intensity, the two maps are similar: zones with the same depth have the same intensity in both images, which makes our results consistent. The high computational cost is the main drawback of this method, and the method itself could be improved, since other approaches to growing sparse sets of points could be applied.

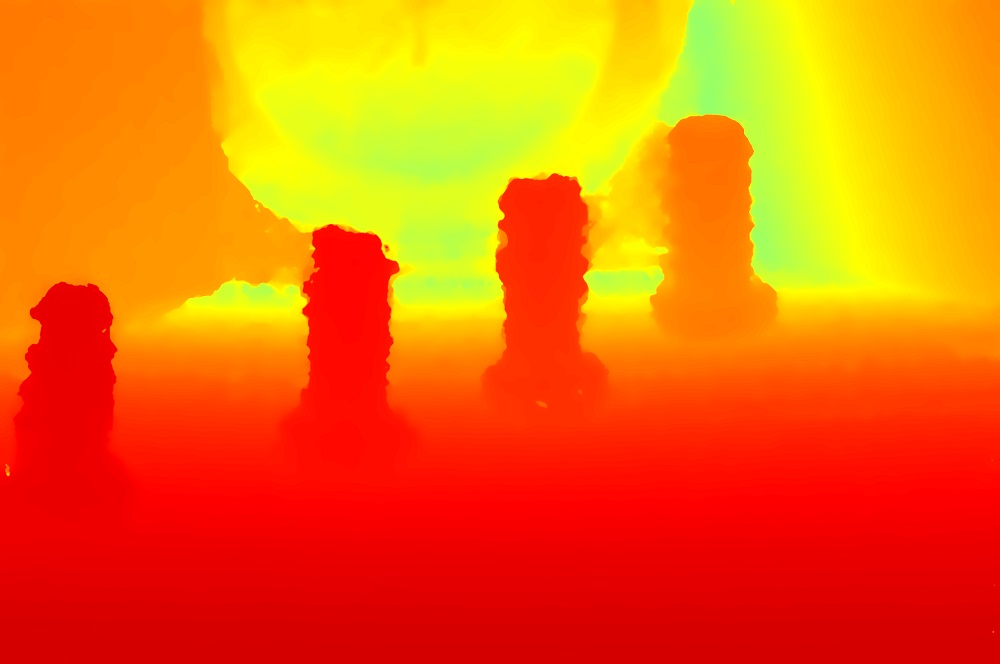

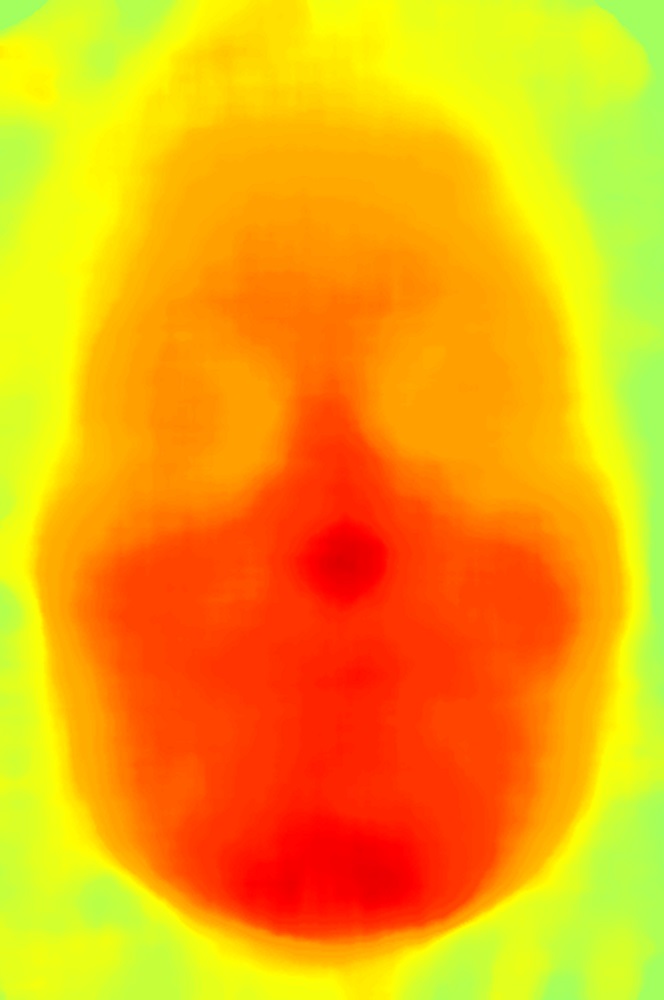

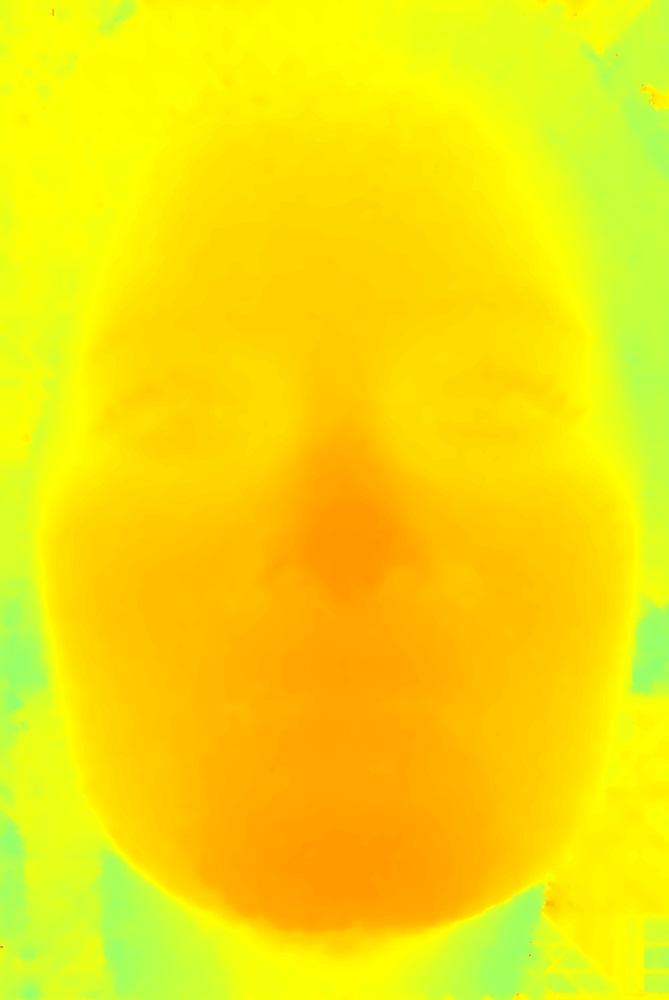

To test the consistency of our method, as mentioned before, we applied our algorithms to another dataset (Andrea dataset), which consists of a capture of a face. Because this image is less detailed, it should be more challenging for our method. The results are presented in the same order as for the previous dataset, with a brief analysis at the end.

Again, our results are similar to the reference ones. As with the previous dataset, the intensities in the two images are not equal; however, the results are consistent, as zones with the same depth have the same intensity. Also, if we trace a vertical axis through the image, the two sides are similar, which leads us to believe that our method is consistent. However, as stated before, we intend to test our algorithms with calibrated images of our own. This time, the difference between our results and the Raytrix ones is 8%, which is good considering that this is a challenging dataset. In summary, our results are good and the method is consistent, although it can and will be improved.
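How the 11% and 8% differences were computed is not specified here. Since our intensities and the Raytrix ones differ in scale, one plausible metric is to min-max normalise both sets of depth values at the compared points and report the mean absolute difference as a percentage. The sketch below does exactly that; it is an assumption for illustration, not necessarily the metric behind the reported figures.

```python
def percent_difference(ours, reference):
    """Mean absolute difference (in %) between two min-max normalised
    depth lists, compared point by point; None entries are skipped.

    Assumed metric, for illustration only.
    """
    pairs = [(a, b) for a, b in zip(ours, reference)
             if a is not None and b is not None]
    if not pairs:
        raise ValueError("no points to compare")

    def normalise(values):
        lo, hi = min(values), max(values)
        span = (hi - lo) or 1.0  # avoid division by zero for flat maps
        return [(v - lo) / span for v in values]

    a = normalise([p[0] for p in pairs])
    b = normalise([p[1] for p in pairs])
    return 100.0 * sum(abs(x - y) for x, y in zip(a, b)) / len(pairs)
```

Normalising first means that two maps differing only by an offset and a scale factor, as our intensities and the Raytrix ones do, score 0%.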

For further information, download the full document here.